Have you heard the big news? 😱

Last week, we offered a first look at how we’re testing OpenAI’s new o1 models with GitHub Copilot. OpenAI o1 is a new series of reasoning models built to solve hard problems, particularly in math, science, and coding, which makes it a natural fit for us to experiment with at GitHub.

These models, o1-preview and o1-mini, are exciting because they were trained to solve problems much like a human would: by thinking things through, trying different strategies, and recognizing their own mistakes. OpenAI reports that its new o1 reasoning model correctly solved 83% of the problems on a qualifying exam for the International Mathematics Olympiad, compared to just 13% for the prior GPT-4o model. That is roughly six times as many problems solved (about 538% more).
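For reference, here is the arithmetic behind comparing the 83% and 13% figures: the first ratio is how many times as many problems were solved, and the second is the relative increase.

```latex
\frac{83\%}{13\%} \approx 6.38
\qquad\text{and}\qquad
\frac{83\% - 13\%}{13\%} = \frac{70}{13} \approx 5.38 = 538\%
```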

Although these models are still in preview, we’re already thinking about how they can be used in tools like GitHub Copilot, and our early experiments are promising.

In this newsletter, we’ll describe some scenarios that showcase the new model’s capabilities within Copilot and how they can help you code more effectively – plus how you can try it out for yourself. Let’s go!

1. Algorithm optimization 🎯

As a developer, you sometimes need to write or refine complex algorithms to come up with efficient or innovative solutions. That work means understanding the constraints, handling edge cases, and iteratively improving the algorithm, all without losing track of the overall objective.

This is exactly the type of scenario where the o1-preview model excels. We created a new code optimization workflow that takes advantage of the model’s reasoning capabilities: the built-in Optimize chat command provides rich editor context out of the box, such as imports, tests, and performance profiles.

We used a real problem faced by the VS Code team for this task: trying to optimize the performance of a byte pair encoder used in Copilot Chat’s tokenizer library. (So yes, we used AI to optimize our AI development. 😎)
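Byte pair encoding (BPE) starts from individual bytes and repeatedly merges the adjacent pair that ranks earliest in a learned merge table. The newsletter doesn’t show the VS Code team’s encoder or what the optimization changed, so what follows is only a minimal, naive sketch of the technique, assuming a hypothetical toy merge table (`TOY_MERGES`) and a hypothetical function name (`bpe_encode`). Its full rescan of every adjacent pair on each merge is the kind of hot loop that tokenizer performance work commonly targets, for example with a priority queue or a cache.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical merge table: adjacent token pair -> merge rank.
# A lower rank means the pair was learned earlier and is merged first.
Merges = Dict[Tuple[bytes, bytes], int]

TOY_MERGES: Merges = {
    (b"l", b"o"): 0,
    (b"lo", b"w"): 1,
    (b"e", b"r"): 2,
}


def bpe_encode(word: bytes, merges: Merges) -> List[bytes]:
    """Naive BPE: repeatedly merge the lowest-ranked adjacent pair."""
    # Start with one token per byte.
    tokens = [bytes([b]) for b in word]
    while len(tokens) > 1:
        best_rank: Optional[int] = None
        best_i = -1
        # Full rescan of every adjacent pair on each iteration:
        # O(n) per merge, O(n^2) per word in the worst case.
        for i in range(len(tokens) - 1):
            rank = merges.get((tokens[i], tokens[i + 1]))
            if rank is not None and (best_rank is None or rank < best_rank):
                best_rank, best_i = rank, i
        if best_rank is None:
            break  # no known pair left to merge
        # Replace the two tokens with their concatenation (leftmost wins on ties).
        tokens[best_i:best_i + 2] = [tokens[best_i] + tokens[best_i + 1]]
    return tokens


print(bpe_encode(b"lower", TOY_MERGES))  # [b'low', b'er']
```

With the toy table, `b"lower"` merges `l`+`o`, then `lo`+`w`, then `e`+`r`, and stops when no remaining pair is in the table.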

The results were really impressive, especially on edge cases, because o1-preview’s reasoning gives it a deeper understanding of the code’s constraints. That ultimately means better-performing code. Oh, and Copilot’s optimization improved the encoder’s performance by almost fifteen percent!

2. Performance bug fixing 🐛

Another scenario we wanted to explore with o1-preview was its ability to identify and develop a solution for performance bugs. So we pointed Copilot Chat, powered by o1-preview, at a real bug that had previously plagued one of our software engineers and asked for suggestions.

Here’s the bug: we were adding a new folder tree to the file view on GitHub.com, but the number of elements on the page was causing some of our code to stall, leading to a browser crash.

We compared how Copilot Chat using GPT-4o and Copilot Chat powered by o1-preview each handled that performance bug.

With GPT-4o, Copilot Chat gave us suggestions in a single block of code, but none of them fixed our bug. With o1-preview, Copilot Chat gave us suggestions in structured steps with a clear chain of thought, and one of those suggestions was a search function that solved our performance bug!

Without Copilot and o1-preview, our engineers had a hard time isolating the problem, because the code managed 1,000 elements and was quite complex. They eventually implemented a change that cut the function’s runtime from more than 1,000ms to about 16ms, but it took several hours. o1-preview suggested the same fix, which means we could have identified and fixed the problem much more quickly with Copilot.
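The newsletter doesn’t include the buggy code or the fix, so the following is a generic, hypothetical illustration (not GitHub’s code) of one common way a search step turns into a performance bug: finding each element’s parent with a linear scan makes building a tree quadratic in the number of elements, while indexing elements by path in a hash map keeps it linear. The `Node` type, the `children_slow` and `children_fast` functions, and the sample data are all assumptions made for this sketch.

```python
from typing import Dict, List, NamedTuple, Optional


class Node(NamedTuple):
    path: str              # hypothetical entry path, e.g. "dir0/file0"
    parent: Optional[str]  # parent folder path; None for top-level entries


def children_slow(nodes: List[Node]) -> Dict[str, List[str]]:
    """Quadratic: each node scans the whole list to find its parent."""
    result: Dict[str, List[str]] = {n.path: [] for n in nodes}
    for node in nodes:
        for candidate in nodes:  # linear search per node -> O(n^2) overall
            if candidate.path == node.parent:
                result[candidate.path].append(node.path)
                break
    return result


def children_fast(nodes: List[Node]) -> Dict[str, List[str]]:
    """Linear: the path-keyed dict doubles as a hash index for parent lookups."""
    result: Dict[str, List[str]] = {n.path: [] for n in nodes}
    for node in nodes:
        # Average O(1) hash lookup instead of a scan over every node.
        if node.parent is not None and node.parent in result:
            result[node.parent].append(node.path)
    return result


# 1,000 hypothetical entries: 990 files followed by the 10 folders holding them,
# so every slow lookup has to scan past all the files first.
files = [Node(f"dir{i % 10}/file{i}", f"dir{i % 10}") for i in range(990)]
folders = [Node(f"dir{i}", None) for i in range(10)]
tree = files + folders

assert children_slow(tree) == children_fast(tree)
print(len(children_fast(tree)["dir0"]))  # 99
```

Both functions produce the same tree, but for these 1,000 entries the slow version performs close to a million path comparisons, while the fast version does about 1,000 dictionary lookups.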

Try it for yourself 🧪

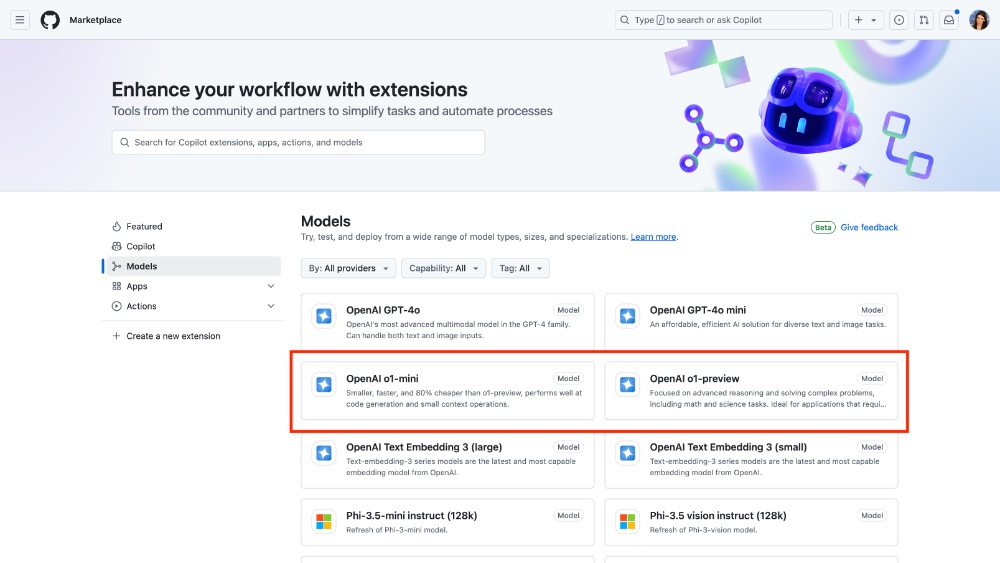

While we’re still experimenting with how we can integrate the o1 models into GitHub Copilot, we also want to see what you might build with this new model series! That’s why we’re bringing the o1 series to GitHub Models, our playground for a smorgasbord of AI models (including other models from OpenAI, as well as from Meta, Microsoft, Cohere, Mistral, and others).

GitHub Copilot users can sign up for a waitlist to access o1-preview and o1-mini in Copilot Chat. We can’t wait to see what you build! And stay tuned as we start to integrate and experiment with o1 models throughout GitHub!

Sign up for the o1 models waitlist

✨ This newsletter was written by Christina Warren and produced by Gwen Davis. ✨

More to explore 🌎

Join our GitHub Copilot conversations 🤖

Visit our community forum to see what people are saying + offer your own two cents.

Stay updated on GitHub products 📦

Discover the latest ships, launches, and improvements in our Changelog.

Subscribe to our LinkedIn newsletter 🚀

Do your best work on GitHub. Subscribe to our LinkedIn newsletter, Branching Out.

Attend GitHub Universe 🪐

Experience the world’s fair of software at the historic Fort Mason Center, October 29-30.